Knosis

Augmentation

H2M

Human-to-Machine refers to a data augmentation process in which insight and domain expertise are transferred predominantly from humans to machines.

M2H

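Machine-to-Human refers to the reverse transfer, in which skills are delivered from machines to humans through platform-independent display devices and standard software.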

H2M2H

Human-to-Machine-to-Human refers to humans communicating with each other through machines, which is what we already do whenever we use digital tools to talk to one another.

Our primary objective is human-to-machine (H2M) learning: delivering high-fidelity, high-depth augmented data for training, boosting and validating artificial intelligence. Throughout our projects and assignments, however, we realized that Knosis also helps workers transition into the digital economy by making their skills visible, relevant and valued in a new business landscape powered by augmented intelligence.

As the boundary between human and machine intelligence blurs, financial compensation is no longer the only expectation of modern digital knowledge workers. They expect the skills they learn and develop to have long-term, appreciating value. For this reason, Knosis uses patent-pending technology to blend in machine-to-human (M2H) learning, allowing workers and collaborators to acquire new skills related to automation, basic scripting and regular expressions in a visual, mobile-friendly environment.

How does augmentation work? #howItsMade

To show how Knosis works under the hood, we have created an intuitive walkthrough of our DeepVISS-compliant best practices.

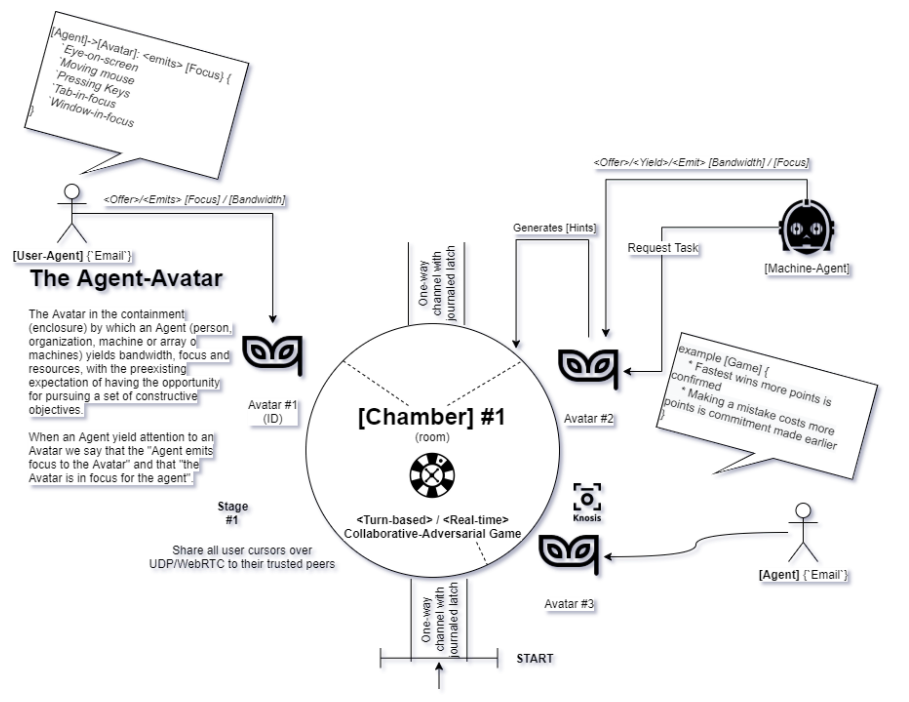

The Knosis data augmentation process comprises several Stages, much like a distillation pipeline. Each Stage is manifested as a Chamber, which is analogous to a timeboxed, purpose-driven chatroom whose participants are Avatars. An Avatar is the privacy-aware container for the identity of an Agent, be it a human (Human-Principal) or a machine (Machine-Principal). Thanks to this Avatar abstraction, users can be confident that whatever limited information they choose to expose about themselves stays fully insulated from the rest of their PII (personally identifiable information). Moreover, Chambers allow us to scale the independent sub-processes for data augmentation, tagging, hinting and validation dynamically, so that the end-to-end process yields the maximum amount of useful information (negentropy) with the minimum delay (end-to-end duration), waste (entropy) and energy consumption.
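The relationships between Stages, Chambers, Avatars and Agents can be sketched as a small data model. This is a minimal illustration under our own assumptions, not the Knosis implementation: the class names, the `shared` attribute map and the 8-character pseudonymous `handle` are hypothetical. What it shows is the insulation: other participants only ever see an Avatar's public profile, never the Agent's PII behind it.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum


class PrincipalKind(Enum):
    HUMAN = "Human-Principal"
    MACHINE = "Machine-Principal"


@dataclass
class Agent:
    """The real identity behind an Avatar; holds the PII that Avatars must not expose."""
    kind: PrincipalKind
    pii: dict = field(repr=False)  # e.g. legal name, e-mail (hypothetical fields)


@dataclass
class Avatar:
    """Privacy-aware container for an Agent's identity."""
    agent: Agent = field(repr=False)
    shared: dict = field(default_factory=dict)  # attributes the Agent chose to expose
    handle: str = field(default_factory=lambda: uuid.uuid4().hex[:8])  # pseudonym

    def public_profile(self) -> dict:
        """What other Avatars in a Chamber see: pseudonym, principal kind, chosen attributes."""
        return {"handle": self.handle, "kind": self.agent.kind.value, **self.shared}


@dataclass
class Chamber:
    """Timeboxed, purpose-driven room where Avatars work on one Task."""
    task: str
    timebox_s: float
    avatars: list = field(default_factory=list)

    def roster(self) -> list:
        return [a.public_profile() for a in self.avatars]


@dataclass
class Stage:
    """One step of the pipeline; its Chambers can be scaled out independently."""
    name: str
    chambers: list = field(default_factory=list)
```

Keeping the Agent out of the Avatar's `repr` and roster is the design choice that matters here: anything logged or broadcast inside a Chamber goes through `public_profile`.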

Stage Zero: Preparation

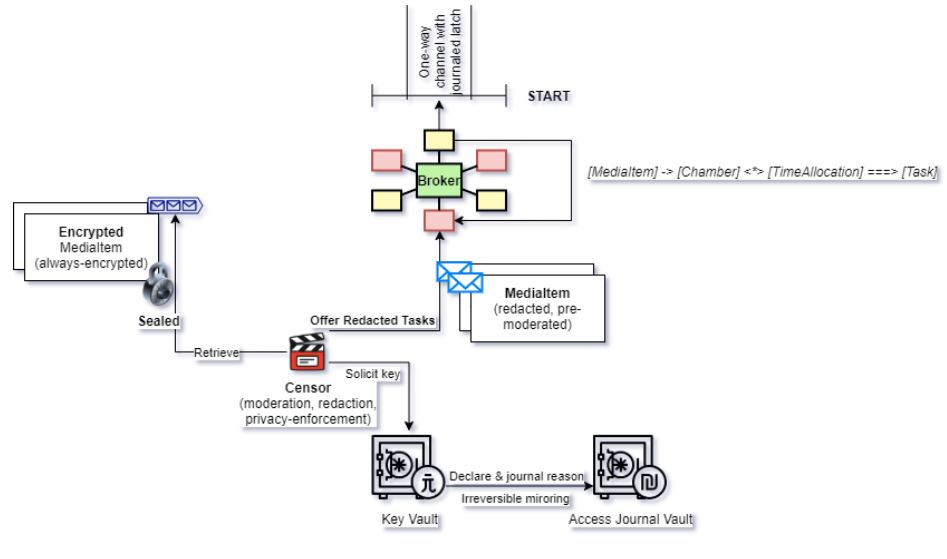

Before the data augmentation and tagging process starts, all Media Items pass through a Censor component, which addresses two concerns:

No PII (personally identifiable information) is accidentally revealed to the human collaborators in the pipeline. While the Censor component employs a wide array of tactics to ensure compliance with privacy standards, one method is to redact any recognizable names (of people, places and organizations) both from the symbolic form (e.g. text, tags, labels, transcriptions) and from the corresponding graphical representation (e.g. background objects that would reveal the location of a building).

No offensive, violent, uncanny or otherwise disturbing imagery is displayed to human collaborators down the pipeline unless they have been notified in advance, fully informed and have given their consent. While Knosis offers automatic content moderation and adult-content filters for situations where sensitive content is expected, we always make sure that collaborators have a “one-click panic button” to report any anomalous or unforeseen artefact, object or manifestation visible in the media presented for tagging and augmentation.
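The two Censor concerns can be illustrated with a minimal sketch. It assumes a precompiled list of known names (a production Censor would more likely rely on named-entity recognition and image analysis, and this sketch covers only the symbolic form) and hypothetical sensitivity flags attached by an upstream moderation model. `redact_names`, `censor`, `may_display` and `panic_button` are illustrative names, not a Knosis API.

```python
import re
from dataclasses import dataclass, field


@dataclass
class MediaItem:
    item_id: str
    text: str  # symbolic form: transcription, tags, labels
    sensitive_flags: set = field(default_factory=set)  # e.g. {"violence"} (hypothetical)


def redact_names(text: str, known_names: list) -> str:
    """Replace each known name (person, place, organization) with a placeholder."""
    names = [n for n in known_names if n]  # an empty name would match everywhere
    if not names:
        return text
    # Longest names first, so "New York City" is matched before "New York".
    alternatives = [re.escape(n) for n in sorted(names, key=len, reverse=True)]
    pattern = re.compile(r"\b(?:" + "|".join(alternatives) + r")\b", re.IGNORECASE)
    return pattern.sub("[REDACTED]", text)


def censor(item: MediaItem, known_names: list) -> MediaItem:
    """Return a redacted copy; the original item is left untouched."""
    return MediaItem(item.item_id, redact_names(item.text, known_names), set(item.sensitive_flags))


def may_display(item: MediaItem, consents: set) -> bool:
    """Display only if the collaborator consented to every sensitive category present."""
    return item.sensitive_flags <= consents


def panic_button(item: MediaItem, reason: str, reports: list) -> None:
    """One-click report: the added "reported" flag blocks display for ordinary consent sets."""
    item.sensitive_flags.add("reported")
    reports.append((item.item_id, reason))


if __name__ == "__main__":
    raw = MediaItem("m1", "Meeting with John Smith at Acme Corp in Paris.", {"violence"})
    clean = censor(raw, ["John Smith", "Acme Corp", "Paris"])
    print(clean.text)  # Meeting with [REDACTED] at [REDACTED] in [REDACTED].
    print(may_display(clean, set()), may_display(clean, {"violence"}))  # False True
```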

As an additional on-demand security feature, the Knosis Enterprise Key Vault component keeps Media Items encrypted almost all the time, each with an individually generated private key. As the diagram shows, the Key Vault must declare the reason for every key request to an independent third-party journal (on-site, in the cloud or on a blockchain) before it can decrypt the content and publish it in the marketplace (Broker).
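A minimal sketch of this "declare before decrypt" rule follows, assuming an in-memory, hash-chained log as a stand-in for the independent journal. Only key release is modelled; the encryption itself is out of scope, and `Journal`, `KeyVault` and `request_key` are hypothetical names. Because every entry hashes its predecessor, a journal that is edited after the fact no longer verifies.

```python
import hashlib
import json
import secrets
import time


class Journal:
    """Append-only, hash-chained log standing in for the third-party journal."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {**record, "prev": prev, "ts": time.time()}
        digest = self._digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Editing or reordering an entry, or removing any but the last, breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


class KeyVault:
    """Holds one individually generated key per Media Item; releases it only after journaling."""

    def __init__(self, journal: Journal) -> None:
        self._keys: dict = {}
        self._journal = journal

    def register(self, item_id: str) -> None:
        self._keys[item_id] = secrets.token_bytes(32)  # 256-bit key, never shared between items

    def request_key(self, item_id: str, requester: str, reason: str) -> bytes:
        if item_id not in self._keys:
            raise KeyError(item_id)
        if not reason.strip():
            raise PermissionError("a reason must be declared before any key is released")
        # Declare first: if journaling fails, no key leaves the vault.
        self._journal.append({"item_id": item_id, "requester": requester, "reason": reason})
        return self._keys[item_id]
```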

The Broker component receives only Redacted Media Items from the Censor; these are safe to disseminate on any pipeline whose designation matches one requested by the Censor.
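The designation check can be read as a simple filter. This sketch assumes that each Redacted Media Item carries the set of designations requested by the Censor and that each pipeline has exactly one designation; both are assumptions, as are the example designation strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedactedMediaItem:
    item_id: str
    designations: frozenset  # pipeline designations requested by the Censor


@dataclass(frozen=True)
class Pipeline:
    name: str
    designation: str  # e.g. "general", "adult-consented" (hypothetical values)


def eligible_pipelines(item: RedactedMediaItem, pipelines: list) -> list:
    """Pipelines on which the Broker may disseminate this item."""
    return [p for p in pipelines if p.designation in item.designations]
```

An item with no requested designation is offered to no pipeline at all, which is the safe default.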

Stages 1 to N-1: Allocating Attention. Gathering and Grouping Hints and Tags. Uncertainty Reaction. Annealing and Culling (Validation).

During each Stage, in each Chamber (room), a timeboxed Game is played among the Avatars: the Redacted Media Item serves as the game environment, and the objective of the Task (functional pursuit or functional closure) defines the goal of the Game.

Depending on the tagging and augmentation Task at hand, several attributes are considered for the proposed game:

- Information Synchronicity - do Avatars see the inbound information at the same time?

- Information Symmetry - what “part of the map” does each Avatar see?

- Move Symmetry - which types of moves performed by Avatar #2 are seen by Avatar #3?

- Instrument Symmetry - what representation/facets of the “map” does each Avatar see?
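One way to make these four attributes concrete is a per-Game configuration object. The field names, the move kinds (`tag`, `hint`), the default `image` facet and the fixed stagger for asynchronous release are all hypothetical; the sketch only shows how each attribute could translate into a visibility rule.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Move:
    avatar: str
    kind: str  # e.g. "tag" or "hint" (hypothetical move kinds)
    payload: str


@dataclass
class GameConfig:
    synchronous: bool = True                            # Information Synchronicity
    regions: dict = field(default_factory=dict)         # Information Symmetry: avatar -> visible regions
    shared_move_kinds: frozenset = frozenset({"tag"})   # Move Symmetry: kinds others can see
    facets: dict = field(default_factory=dict)          # Instrument Symmetry: avatar -> representations

    def release_delay(self, position: int, stagger_s: float = 5.0) -> float:
        """Seconds after round start at which the Avatar at `position` receives the item."""
        return 0.0 if self.synchronous else position * stagger_s

    def sees_region(self, avatar: str, region: str) -> bool:
        """An Avatar with no region entry sees the whole map."""
        allowed = self.regions.get(avatar)
        return allowed is None or region in allowed

    def visible_moves(self, viewer: str, moves: list) -> list:
        """An Avatar always sees its own moves; others' only if their kind is shared."""
        return [m for m in moves if m.avatar == viewer or m.kind in self.shared_move_kinds]

    def facets_for(self, avatar: str) -> tuple:
        return self.facets.get(avatar, ("image",))
```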

In choosing a type of Game, there are always trade-offs to consider:

- Sharing all information makes the process more efficient, but less robust to malevolent, incompetent or unfocused Agent-Avatars.

- Sharing no information makes the process more robust, but also more expensive and less efficient.

- Allowing each Agent to act only on the information available to it may create blind spots and drops in accuracy.

- Allowing each Agent to broadcast information relevant to it to the entire Chamber leads to Agent fatigue, which makes the Avatar group less inclined to rely on externally available information (i.e. the Chamber gets noisy and spammy, and everyone tends to mute incoming messages or otherwise shield their attention).

The Knosis Broker component handles all these trade-offs through dynamic multi-objective optimization, acting as the de facto ML equivalent of a Demand Side Platform in the OpenRTB landscape.
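The Broker's actual optimizer is not described here. As an assumed stand-in, the sketch below uses a common multi-objective technique: discard every candidate Game that is Pareto-dominated, then pick one point on the remaining front with task-specific weights. The objective names and the numbers in the test are illustrative, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    cost: float     # e.g. Avatar-minutes spent (hypothetical unit)
    error: float    # expected tagging error (blind spots, bad actors)
    fatigue: float  # expected message load per Avatar

    def objectives(self) -> tuple:
        return (self.cost, self.error, self.fatigue)  # all three are minimized


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on every objective and strictly better on at least one."""
    ao, bo = a.objectives(), b.objectives()
    return all(x <= y for x, y in zip(ao, bo)) and any(x < y for x, y in zip(ao, bo))


def pareto_front(candidates: list) -> list:
    """Keep only candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates if o is not c)]


def choose(candidates: list, weights: tuple) -> Candidate:
    """Pick one point on the front by weighted sum; weights encode the Task's priorities."""
    front = pareto_front(candidates)
    return min(front, key=lambda c: sum(w * v for w, v in zip(weights, c.objectives())))
```

Filtering to the front first means the weights only arbitrate between genuinely different trade-offs (e.g. share-all versus share-none), never in favour of a configuration that is worse on every axis.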

As you may intuit, not all Avatars need to be backed by a Human Principal; some may just as well be algorithms and methods backed by a Machine Principal, as shown in the figure below. In this particular case, a Machine-Agent responds with Hints or Tags after allocating the bandwidth/focus/attention previously offered for the given Task.

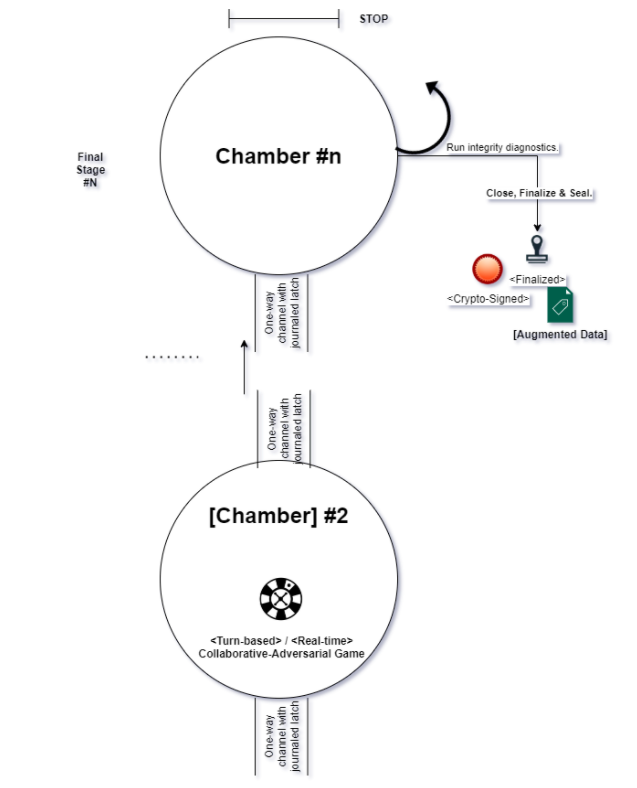
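A timeboxed round mixing Human- and Machine-backed Avatars, followed by a simple grouping and culling step, might look like the sketch below. It assumes every Avatar can be modelled as a function from the redacted item to a set of tags, uses a toy keyword matcher as the Machine-Agent, and substitutes plain agreement voting for the annealing and validation logic, which is not specified here. All names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor, wait


def keyword_machine_agent(item_text: str) -> set:
    """Toy Machine-Agent: proposes a tag for every vocabulary word found in the text."""
    vocabulary = ("cat", "dog", "sofa")  # hypothetical label set
    words = item_text.lower().split()
    return {w for w in vocabulary if w in words}


def play_round(item_text: str, avatars: dict, timebox_s: float) -> dict:
    """Ask all Avatars in parallel; answers arriving after the timebox are discarded."""
    pool = ThreadPoolExecutor(max_workers=max(1, len(avatars)))
    futures = {pool.submit(respond, item_text): handle for handle, respond in avatars.items()}
    done, _ = wait(futures, timeout=timebox_s)
    # Do not block on stragglers; calls not yet started are cancelled (Python 3.9+).
    pool.shutdown(wait=False, cancel_futures=True)
    return {futures[f]: f.result() for f in done if f.exception() is None}


def group_and_cull(answers: dict, min_agreement: float = 0.5) -> tuple:
    """Accept tags proposed by at least `min_agreement` of the responding Avatars."""
    if not answers:
        return set(), set()
    counts = Counter(tag for tags in answers.values() for tag in set(tags))
    accepted = {tag for tag, n in counts.items() if n / len(answers) >= min_agreement}
    uncertain = set(counts) - accepted  # candidates for another Stage (Uncertainty Reaction)
    return accepted, uncertain


if __name__ == "__main__":
    avatars = {
        "h1": lambda text: {"cat", "sofa"},  # stand-ins for Human-backed Avatars
        "h2": lambda text: {"cat", "dog"},
        "m1": keyword_machine_agent,         # Machine-backed Avatar
    }
    answers = play_round("A cat sleeping on a sofa", avatars, timebox_s=1.0)
    accepted, uncertain = group_and_cull(answers)
    print(sorted(accepted), sorted(uncertain))  # ['cat', 'sofa'] ['dog']
```

Raising `min_agreement` trades recall for precision; whatever lands in `uncertain` is a natural input for a further Stage.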

Stage N: Finalization and Seal.

In the final Stage, the Knosis Augmentation component finalizes any pending validations (if necessary by marking them as “partial”) and applies a timestamped cryptographic signature to the resulting data augmentation (e.g. tags, transcriptions, relationships).
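The sealing step can be sketched with the standard library, assuming a flat map of validation statuses in which anything still `pending` is closed as `partial`. HMAC-SHA256 is used here only as a stand-in: it lets anyone holding the key detect tampering, but real non-repudiation would require an asymmetric signature (e.g. Ed25519) and a trusted timestamping service. `seal` and `verify_seal` are hypothetical names.

```python
import hashlib
import hmac
import json
import time


def _payload(body: dict) -> bytes:
    # Canonical JSON so that the same content always produces the same bytes.
    return json.dumps(body, sort_keys=True).encode()


def seal(augmentation: dict, validations: dict, signing_key: bytes, clock=time.time) -> dict:
    """Close pending validations as "partial", then timestamp and sign the result."""
    record = {
        "augmentation": augmentation,
        "validations": {k: ("partial" if v == "pending" else v) for k, v in validations.items()},
        "sealed_at": clock(),
    }
    record["signature"] = hmac.new(signing_key, _payload(record), hashlib.sha256).hexdigest()
    return record


def verify_seal(record: dict, signing_key: bytes) -> bool:
    """Recompute the MAC over everything except the signature itself."""
    body = {k: v for k, v in record.items() if k != "signature"}
    expected = hmac.new(signing_key, _payload(body), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["signature"])
```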

This step is necessary to enforce privacy and to guarantee non-repudiation of the tagging actions and of the resulting final output.